Introduction

This project was for the University of Toronto course MIE443, Mechatronics Systems: Integration and Design. Each group was assigned a Turtlebot2, programmed its control systems, and completed three challenges:

- Map an enclosure

- Travel to and catalogue 5 objects before returning “home”

- Follow a user and react to the environment with emotions

I worked with Christine, Dave and Jack. Together, we developed the strategies and control system architecture, wrote and tested the code on the Turtlebot2, and wrote reports detailing our methodology and results.

Basic Information

A Turtlebot is an open-source personal robot that runs on ROS. It consists of a Kobuki base with motorized wheels and bumper sensors, a Kinect sensor and some additional structure.

Mapping an Enclosure: Object Avoidance and Autonomous Mapping

In this contest, I helped tune the laser scan code and write the exploration strategy.

The goal was to autonomously explore an unknown enclosure while using the built-in mapping capabilities.

| The objectives, in descending priority, were to: | The constraints were: |
|---|---|
| - Cover the entire area.<br>- Accurately map the area.<br>- Reduce the time spent, with a maximum of 8 minutes.<br>- Avoid triggering the contact sensors, which were included only to protect the robot. | - The map must be formed from sensory data from the Kinect.<br>- The robot must be fully autonomous, with no human intervention.<br>- A speed limit of 0.25 m/s, and of 0.1 m/s near obstacles.<br>- vel_pub was the only allowed publisher, which ruled out pre-packaged exploration and object avoidance code. |
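The two speed limits can be enforced in one place before any velocity is published. This is a minimal sketch, not our contest code: `limitLinearSpeed` and its `nearDistance` parameter are hypothetical names, and the distance that counts as "near" an obstacle is an assumption, since the rules above do not state it.

```cpp
#include <algorithm>
#include <cassert>

// Limits from the contest constraints.
constexpr double kMaxSpeed = 0.25;             // m/s in open space
constexpr double kMaxSpeedNearObstacle = 0.1;  // m/s near obstacles

// Clamp a requested forward speed to whichever limit applies.
// `nearDistance` (metres) is a hypothetical threshold for "near".
double limitLinearSpeed(double requested, double nearestObstacle, double nearDistance) {
    assert(nearDistance > 0.0);
    const double cap = (nearestObstacle < nearDistance) ? kMaxSpeedNearObstacle : kMaxSpeed;
    return std::max(-cap, std::min(requested, cap));
}
```

Clamping symmetrically means the same limit applies when the robot reverses.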

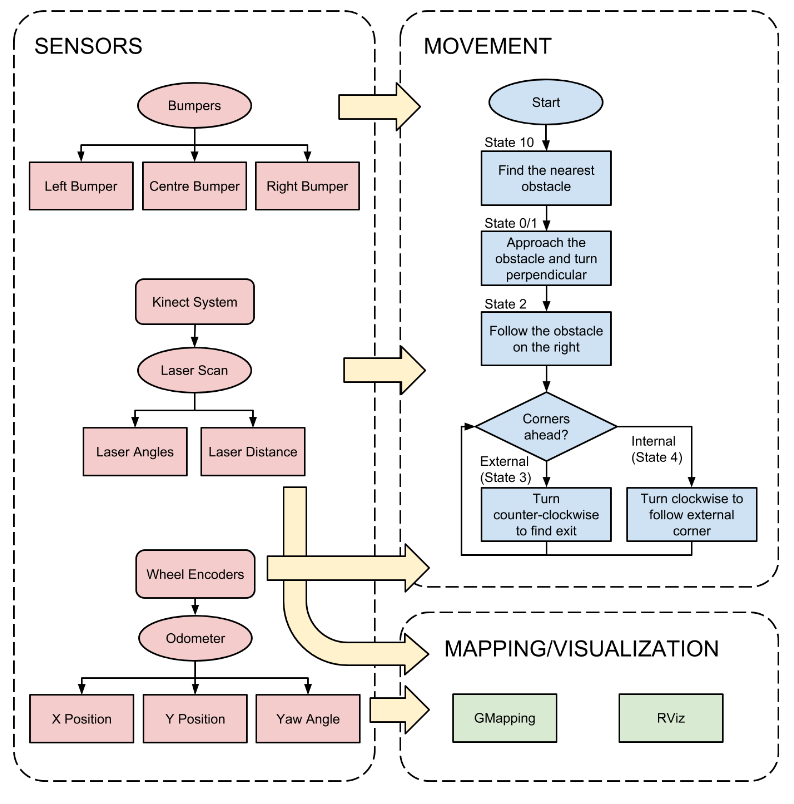

How the sensors, code and motors work together

Control architecture flowchart

Diagram credit to Christine for drawing it

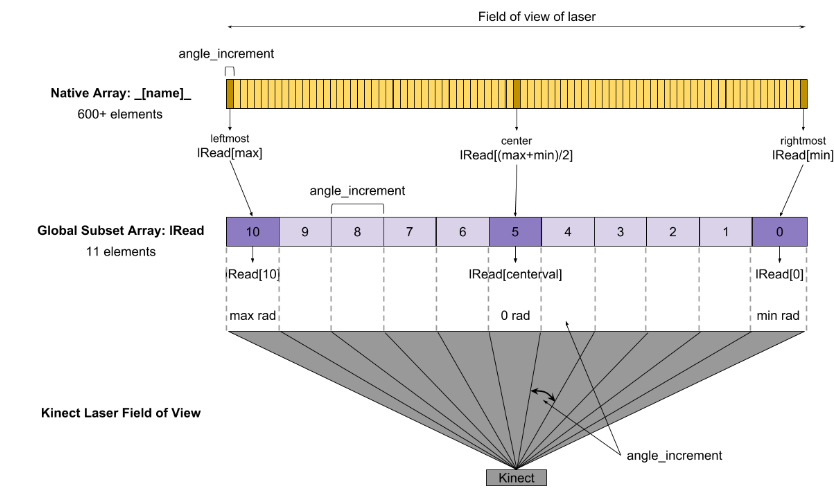

How we got distance data

The Kinect is not a true laser scanner, but it can be used as one: a pre-packaged process converts its output into a laser scan array with over 600 elements. We discretized this array into 10 sectors by averaging the points in each, which made it easier to use in code.

With this, we had information on how close an object was to the front of the Turtlebot2 at each angle. This formed the basis of our object avoidance algorithm and thus our exploration code.

Kinect sensor discretization process

Image credit to the course for providing it
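The averaging step can be sketched roughly as below. This is an illustration rather than our contest code: `discretizeScan` is a hypothetical name, the input is taken to be the `ranges` array of a ROS `sensor_msgs/LaserScan` message, and skipping non-finite readings (which a depth-camera scan may contain) is an assumption.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Average a dense range scan into `bins` equal-width sectors.
// Non-finite readings (NaN or inf) are skipped; a sector with no
// valid reading reports +infinity, i.e. "nothing seen".
std::vector<double> discretizeScan(const std::vector<float>& ranges, std::size_t bins = 10) {
    assert(bins > 0);
    std::vector<double> sectors(bins, std::numeric_limits<double>::infinity());
    const std::size_t n = ranges.size();
    for (std::size_t b = 0; b < bins; ++b) {
        const std::size_t begin = b * n / bins;
        const std::size_t end = (b + 1) * n / bins;
        double sum = 0.0;
        std::size_t count = 0;
        for (std::size_t i = begin; i < end; ++i) {
            if (std::isfinite(ranges[i])) {
                sum += ranges[i];
                ++count;
            }
        }
        if (count > 0) {
            sectors[b] = sum / count;
        }
    }
    return sectors;
}
```

Each of the 10 values then stands for the average distance to whatever lies in that slice of the field of view.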

Find and Catalogue Objects: Path Planning and Image Identification

In this contest, I was responsible for creating the path planning functions, adjusting the given coordinates of the boxes and sorting all the data so my group could use it. I also helped with all of the robot testing.

The goal was to autonomously travel to boxes with given coordinates, correctly catalogue the image on each, return to the starting position, and then print a text file stating which box had which image. The area had no objects other than the 5 boxes. Between them, the boxes showed 3 distinct images and 1 blank sheet, with one of the images repeated. We were given a map, the coordinates of each box, and the 3 images to compare against.

| The objectives, in descending priority, were to: | The constraints were: |
|---|---|
| - Catalogue the images accurately.<br>- Minimize the time spent, with a maximum of 5 minutes. | - The map must be formed from sensory data from the Kinect.<br>- The robot must be fully autonomous.<br>- vel_pub, move_base and SURF were the only pre-packaged libraries allowed. |

Here are some videos showing successful test runs

| A complete success | Crowded but success! | Short video, static camera |

|---|---|---|

As seen here, the Turtlebot2 traveled to each box facing the correct side, correctly identified each image, and returned to its starting position.

Here’s the code for my coordinate tuning and path planning functions


    // Excerpt from the contest node. tCoord rows are {x, y, phi}: row 0 is home
    // and rows 1-5 are the boxes. tCoord and pi are globals defined elsewhere.
    void tuning() {
        // The robot cannot stand where a box is, so move each goal to a point
        // in front of the box and turn the robot around to face it.
        const double xShift = 0.6, yShift = 0.6;

        for (int i = 1; i <= 5; ++i) {
            const double phi = tCoord[i][2];

            // Shift x and y along the box's heading. Axis-aligned headings
            // (0, +/-pi/2, +/-pi) need no special cases, because cos and sin
            // already reduce to a pure x or y shift there.
            tCoord[i][0] += xShift * cos(phi);
            tCoord[i][1] += yShift * sin(phi);

            // Flip the orientation by 180 degrees. This must come after the
            // shift, which relies on the original phi.
            tCoord[i][2] = (phi > 0) ? phi - pi : phi + pi;
        } // end for loop
    } // end of tuning()

    void TSP() {
        // Brute-force path finding over home (row 0) and the 5 boxes (rows 1-5).
        double xd = 0, yd = 0, pathLength = 0, shortest = 9999;
        int path[5] = {}; // visiting order of the boxes, stored as tCoord row numbers

        // Fill the symmetric matrix of straight-line distances between goals.
        for (int i = 5; i > 0; --i) {
            disBwGoals[i][i] = 0;
            for (int j = i - 1; j >= 0; --j) {
                xd = tCoord[i][0] - tCoord[j][0];
                yd = tCoord[i][1] - tCoord[j][1];
                disBwGoals[i][j] = disBwGoals[j][i] = sqrt(xd*xd + yd*yd);
            }
        }

        // Try every ordering of the 5 boxes; each loop skips boxes already used.
        for (int i = 1; i <= 5; ++i) {
            for (int j = 1; j <= 5; ++j) {
                if (j == i) { continue; }
                for (int k = 1; k <= 5; ++k) {
                    if (k == j || k == i) { continue; }
                    for (int m = 1; m <= 5; ++m) {
                        if (m == j || m == i || m == k) { continue; }
                        for (int n = 1; n <= 5; ++n) {
                            if (n == j || n == i || n == k || n == m) { continue; }

                            pathLength = disBwGoals[0][i] + disBwGoals[i][j] + disBwGoals[j][k]
                                       + disBwGoals[k][m] + disBwGoals[m][n] + disBwGoals[n][0];

                            if (pathLength < shortest) {
                                shortest = pathLength;
                                // save the path order for later, aka the coord row #
                                path[0] = i; path[1] = j; path[2] = k; path[3] = m; path[4] = n;
                            }
                            break; // only one box is left for n, so stop searching
                        }
                    }
                }
            }
        }

        // fill adjustedPath
        int tmp = 0; // holds path's saved coord row #

        // HOME is always first and last, so just copy it over first
        for (int i = 0; i < 3; ++i) {
            adjustedPath[0][i] = tCoord[0][i];
        }
        adjustedPath[0][3] = -1; // HOME doesn't exist in coord, so make it (-) just in case

        for (int i = 1; i < 6; ++i) {
            tmp = path[i-1];
            for (int k = 0; k < 3; ++k) {
                adjustedPath[i][k] = tCoord[tmp][k];
            }
            adjustedPath[i][3] = tmp; // tag: which box this stop is
        }
    } // end of TSP()

First, the coordinates had to be converted. The ones given were for the boxes themselves, and the Turtlebot2 needed to be facing them, not knocking them over. The conversion flips the orientation by adding or subtracting 180 degrees and uses trigonometry to shift the x and y coordinates.

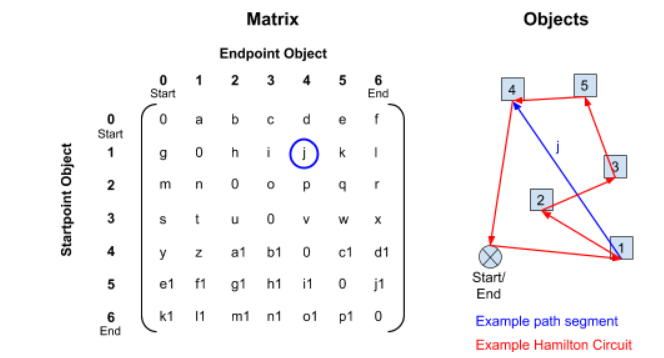
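As a worked example of that conversion, here is a small standalone sketch. It assumes each box's angle points outward from the face carrying the image, and `Pose`, `approachPose` and `standoff` are hypothetical names used only for this illustration.

```cpp
#include <cassert>
#include <cmath>

struct Pose { double x, y, phi; };  // metres, metres, radians

// Goal pose for the robot: `standoff` metres in front of the box,
// turned around so that it looks back at the box.
Pose approachPose(const Pose& box, double standoff) {
    assert(standoff >= 0.0);
    const double kPi = std::acos(-1.0);
    Pose goal;
    goal.x = box.x + standoff * std::cos(box.phi);
    goal.y = box.y + standoff * std::sin(box.phi);
    // Flip by 180 degrees, keeping the heading within [-pi, pi].
    goal.phi = (box.phi > 0.0) ? box.phi - kPi : box.phi + kPi;
    return goal;
}
```

For a box at (1.0, 2.0) facing +90 degrees and a standoff of 0.6 m, the goal works out to roughly (1.0, 2.6) with a heading of -90 degrees, so the robot stops in front of the box and looks back at it.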

Second, for path planning, since we had only 6 points (the origin and 5 objects), I decided to brute-force the Hamiltonian circuit. It may be “inefficient”, but with only 6 points even the ordinary laptop driving the Turtlebot2 could run it in less than 1 second, so making it more efficient would have been a waste of time.

For the brute force:

- The straight-line distances between all possible pairs of points are calculated

- These distances are stored in a global symmetric matrix

- 5 nested for loops are used to calculate every possible path length and find the shortest

- The shortest path is stored in an array that holds, in travelling order, the coordinates and tag of each box
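The same exhaustive search can be written more compactly with `std::next_permutation`, which steps through all 5! = 120 orderings of the boxes. This is an alternative sketch, not the code we ran; `Point` and `shortestTour` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <limits>
#include <vector>

struct Point { double x, y; };

// Returns the visiting order (indices 1..n-1 into `pts`) of the shortest
// closed tour that starts and ends at pts[0]. Exhaustive search, so only
// sensible for a handful of points.
std::vector<int> shortestTour(const std::vector<Point>& pts) {
    assert(!pts.empty());
    auto dist = [&pts](int a, int b) {
        return std::hypot(pts[a].x - pts[b].x, pts[a].y - pts[b].y);
    };
    std::vector<int> order;
    for (int i = 1; i < static_cast<int>(pts.size()); ++i) {
        order.push_back(i);  // ascending, so next_permutation visits every ordering
    }
    std::vector<int> best = order;
    double bestLength = std::numeric_limits<double>::infinity();
    do {
        double length = 0.0;
        int prev = 0;  // start at home
        for (int stop : order) {
            length += dist(prev, stop);
            prev = stop;
        }
        length += dist(prev, 0);  // return home
        if (length < bestLength) {
            bestLength = length;
            best = order;
        }
    } while (std::next_permutation(order.begin(), order.end()));
    return best;
}
```

Every tour has a mirror image of the same length, and because the comparison is strict, the first of the two found is the one kept.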

Following a Person and Reacting: A Companionbot

In this contest, I was responsible for creating a function that would recognizably emote rage and for ensuring the state machine entered and left that state correctly. I also helped with all of the robot testing.

The goal was for the robot to identify and follow a user as they move through an environment. While following, it should be able to interact with the user by displaying 4 emotions. 2 of the emotions must be reflexive, and the other 2 must be deliberative (i.e. not immediate, with the nature of the reaction changing as further information arrives).

Here’s my rage emoting code

The robot emotes rage whenever any of its bumper sensors is pressed. The basic idea is that, since the Turtlebot2 is reacting to hitting an object it did not detect, it is angry that it got ‘hurt’ and tries to physically hit whatever hurt it.

First, the Turtlebot2 will rapidly back up, play a sound bite of someone swearing, and display a rage emoticon image (i.e. (ノಠ益ಠ)ノ).

Next, it will suddenly move forward rapidly toward the obstacle, the acceleration causing the robot to lean backwards and tip. It also plays a sound of a scream of rage and displays an image of a screaming, angry cartoon.

Finally, the robot will stop in place, and slowly rotate until it has spun 90 degrees clockwise while displaying the first rage image. This served as both a transition time to switch back to the base state and to show that the robot was “calming down”.


    // Part of a state machine; this branch runs while the robot is raging.
    else if (world_state == 2) { // 5 sec total
        //--(0) stop the bot, show the first rage image and play the first sound
        if (rage_state == 0) {
            bumperIsPressed = false; rageTime = secondsElapsed + 2;
            std::cout << "just started" << std::endl;
            displayImage(path_to_pics + rage_3);
            sc.playWave(path_to_sounds + "rage_1_f_word.wav"); // f***!
            sleep(1);
            angular = 0; linear = 0; rage_state = 1;
        }
        //--(1) back up quickly until rageTime is reached
        else if (rage_state == 1) {
            angular = 0; linear = -0.7;
            std::cout << "backing up" << std::endl;
            if (secondsElapsed >= rageTime) {
                sc.stopWave(path_to_sounds + "rage_1_f_word.wav"); // added but doesn't work well
                rage_state = 2; rageTime = secondsElapsed + 2; angular = 0; linear = 0;
                displayImage(path_to_pics + rage_2);
                sc.playWave(path_to_sounds + "rage_1_roar.wav"); // ra!
                sleep(1);
            }
        }
        //--(2) lunge forward at the obstacle until rageTime is reached
        else if (rage_state == 2) {
            angular = 0; linear = 0.7;
            std::cout << "ha!" << std::endl;
            if (secondsElapsed >= rageTime) {
                sc.stopWave(path_to_sounds + "rage_1_roar.wav");
                rage_state = 3; rageTime = secondsElapsed + 2;
                displayImage(path_to_pics + rage_3);
                sc.playWave(path_to_sounds + "rage_1_iku.wav"); // huff!
                sleep(1);
            }
        }
        //--(3) spin clockwise on the spot until rageTime is reached
        else if (rage_state == 3) {
            angular = -pi/2; linear = 0;
            std::cout << "ptoey" << std::endl;
            if (secondsElapsed >= rageTime) {
                rage_state = 6;
            }
        }
        //--(6) reset everything and hand control back (world_state 0)
        else if (rage_state == 6) {
            bumperIsPressed = false;
            std::cout << "returning to 2" << std::endl;
            world_state = 0; rage_state = 0; rageTime = 9999; angular = 0; linear = 0; targetTime = 9999;
        }

        // publish whatever velocity the current step chose
        vel.angular.z = angular; vel.linear.x = linear; vel_pub.publish(vel);
    }
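The back-up, lunge and turn sequence can also be expressed as a lookup on elapsed time, which avoids blocking the control loop with sleep calls. This is only a sketch of that idea: `Command` and `rageCommandAt` are hypothetical names, the speeds are the ones used in the code above, and the one-second length of each phase is an assumption based on its comments.

```cpp
#include <cassert>

struct Command {
    double linear;   // m/s, forward positive
    double angular;  // rad/s, counter-clockwise positive
    bool done;       // true once the sequence has finished
};

// Velocity command for the rage sequence, `elapsed` seconds after it starts.
Command rageCommandAt(double elapsed) {
    assert(elapsed >= 0.0);
    const double kPi = 3.14159265358979323846;
    if (elapsed < 1.0) return {-0.7, 0.0, false};      // back away
    if (elapsed < 2.0) return {0.7, 0.0, false};       // lunge at the obstacle
    if (elapsed < 3.0) return {0.0, -kPi / 2, false};  // turn 90 degrees clockwise
    return {0.0, 0.0, true};                           // calm again
}
```

The main loop would call this once per cycle and publish the result, switching back to the base state when `done` is true.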

Notes

A fair amount of the writing was summarized from our reports, and all the diagrams were pulled from them; credit to my teammates.

Also, I consider this page to be incomplete, but sufficient. More details and edits to be added in time.